We live in the information age, an age in which we have the opportunity to be "data driven" by measuring and tracking everything. Those of us who design and develop digital products have many ways to determine how well we've succeeded. To understand whether a product meets customer needs and goals, we may run usability tests and other activities that are largely qualitative in nature. Yet the business often wants a hard and fast number. We toss out acronyms such as NPS (Net Promoter Score), CSAT (Customer Satisfaction) and CES (Customer Effort Score). We invoke the "voice of the customer" when making business decisions.

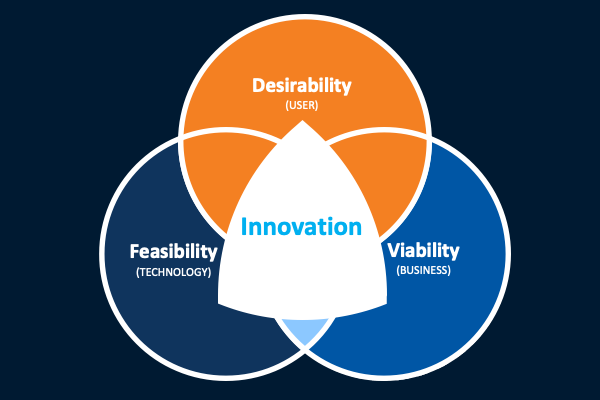

Qualitative and quantitative research need to be applied thoughtfully and consistently. As the Venn diagram illustrates, the "voice of the customer" is absent from many business roadmaps, so I want to tell the story of setting up a process for collecting customer feedback in a large enterprise. An organization could take many approaches, but I want to describe mine and the influences behind it. First, I do not buy into "one number to rule them all." I caution organizations against claiming success without taking context into account. The qualitative data carries more value here because it explains the quantitative data. In fact, some argue against calculating a score at all, because the real value lies in the follow-up question. Understanding the why matters more.

Only recently was I given a greater voice in creating enterprise-level customer feedback loops. Yes, I say loop, because all too often businesses do not think carefully about how to close it. Or they collect too much feedback, gluttonously and at the wrong times. I have been influenced by Tomer Sharon's approach to customer feedback, particularly his three recommendations:

Over the last few years, we've made strides in initiating thoughtful customer feedback at CMS. We support an IT group made up of dozens of contracting companies, all at various stages of maturity. CMS leadership has to rely on whatever data each contracting company happens to collect to gauge the success of the products and services it deploys and supports.

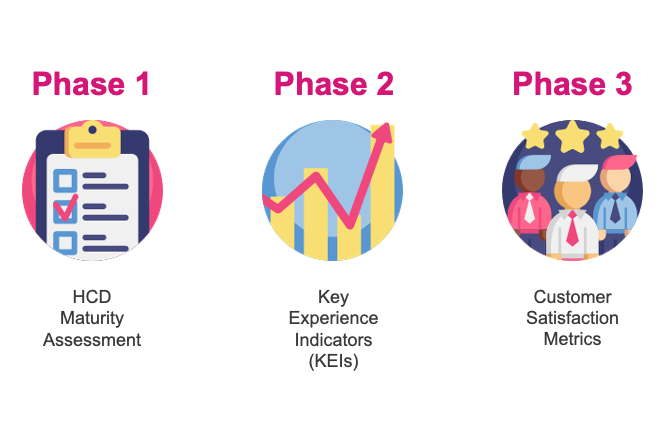

I wanted to change that by establishing consistent data points across the enterprise that could provide actionable intelligence for CMS IT leadership and for the contracting companies responsible for designing, developing and deploying specific systems. My team and I defined a three-phase approach, including:

Quite honestly, there are no specific results to report yet, since much of this initiative is still in progress. Right now I am operating with a number of hypotheses. I am working with an organization that does not systematically collect customer feedback, so I am establishing baselines with the intent of identifying trends, which will then let us make course corrections in the way products and services are designed and delivered. And I am not fixated on any one data point or collection method. I work in an organization that preaches continuous improvement, so if one method does not give us the data we need for important business decisions, we will adapt. Regardless, I am quite proud that I have guided a team in establishing these important feedback loops.